Hormonal regulation of behavioral and emotional persistence: Novel insights from a systems-level approach to neuroendocrinology

Asokan, M.M. and Falkner, A.L., Neurobiology of Learning and Memory 2025

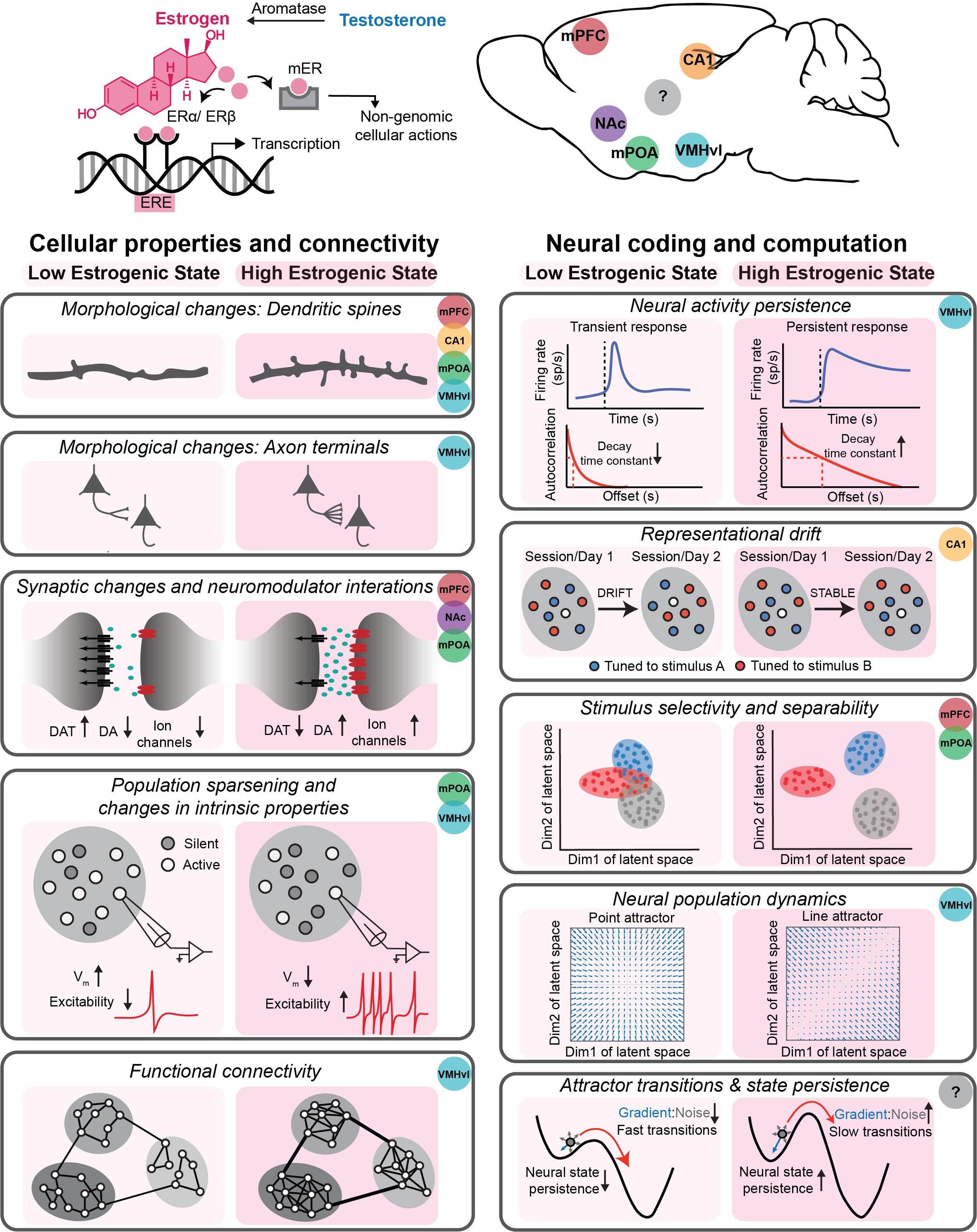

Gonadal sex steroid hormones such as estrogen and testosterone regulate internal states, social drive, perception of external cues, and learning and memory. Fluctuating hormones influence mood and emotional states, enabling flexibility in instinctive behaviors and cognitive decisions. Conversely, elevated hormone levels help sustain emotional states and behavioral choices, ensuring the precise execution of costly social behaviors within optimal time windows to maximize reproductive success. While decades of work have shed light on the cellular and molecular mechanisms by which sex hormones alter neural excitability and circuit architecture, recent work has begun to tie many of these changes to principles of computation using the tools of systems neuroscience. In this review, we outline the mechanisms by which sex steroid hormones alter neural functioning at the molecular and cellular level and highlight recent work that points towards changes in specific computational functions, including the generation and maintenance of neural and behavioral persistence. My current postdoctoral work demonstrates that estrogen reshapes female social preference and choice persistence by remodeling cortical function across multiple levels, from underlying functional connectivity to neural coding and emergent network dynamics.

Potentiation of cholinergic and corticofugal inputs to the lateral amygdala in threat learning

Asokan, M.M., Watanabe, Y., Kimchi, E.Y., and Polley, D.B., Cell Reports 2023

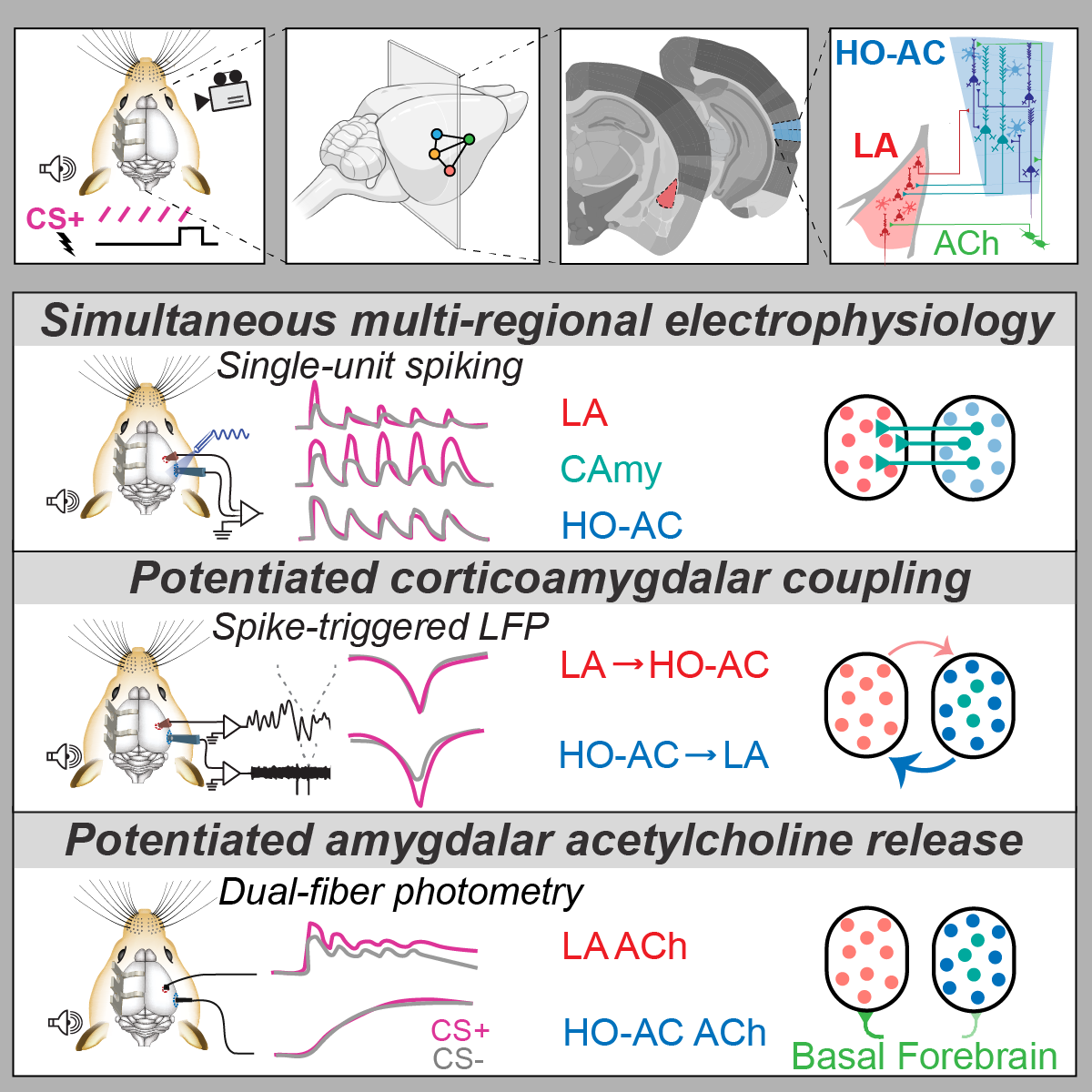

The amygdala, cholinergic basal forebrain, and higher-order auditory cortex (HO-AC) regulate brain-wide plasticity underlying auditory threat learning. Here, we perform multi-regional extracellular recordings and optical measurements of acetylcholine (ACh) release to characterize the development of discriminative plasticity within and between these brain regions as mice acquire and recall auditory threat memories. Spiking responses are potentiated for sounds paired with shock (CS+) in the lateral amygdala (LA) and optogenetically identified corticoamygdalar projection neurons, although not in neighboring HO-AC units. Spike- or optogenetically triggered local field potentials reveal enhanced corticofugal—but not corticopetal—functional coupling between HO-AC and LA during threat memory recall that is correlated with pupil-indexed memory strength. We also note robust sound-evoked ACh release that rapidly potentiates for the CS+ in LA but habituates across sessions in HO-AC. These findings highlight a distributed and cooperative plasticity in LA inputs as mice learn to reappraise neutral stimuli as possible threats. I presented this last chapter of my thesis as posters at the Optogenetics GRC 2022 and APAN 2021.

Inverted central auditory hierarchies for encoding local intervals and global temporal patterns

Asokan, M.M., Williamson, R.S., Hancock, K.E. and Polley, D.B., Current Biology 2021

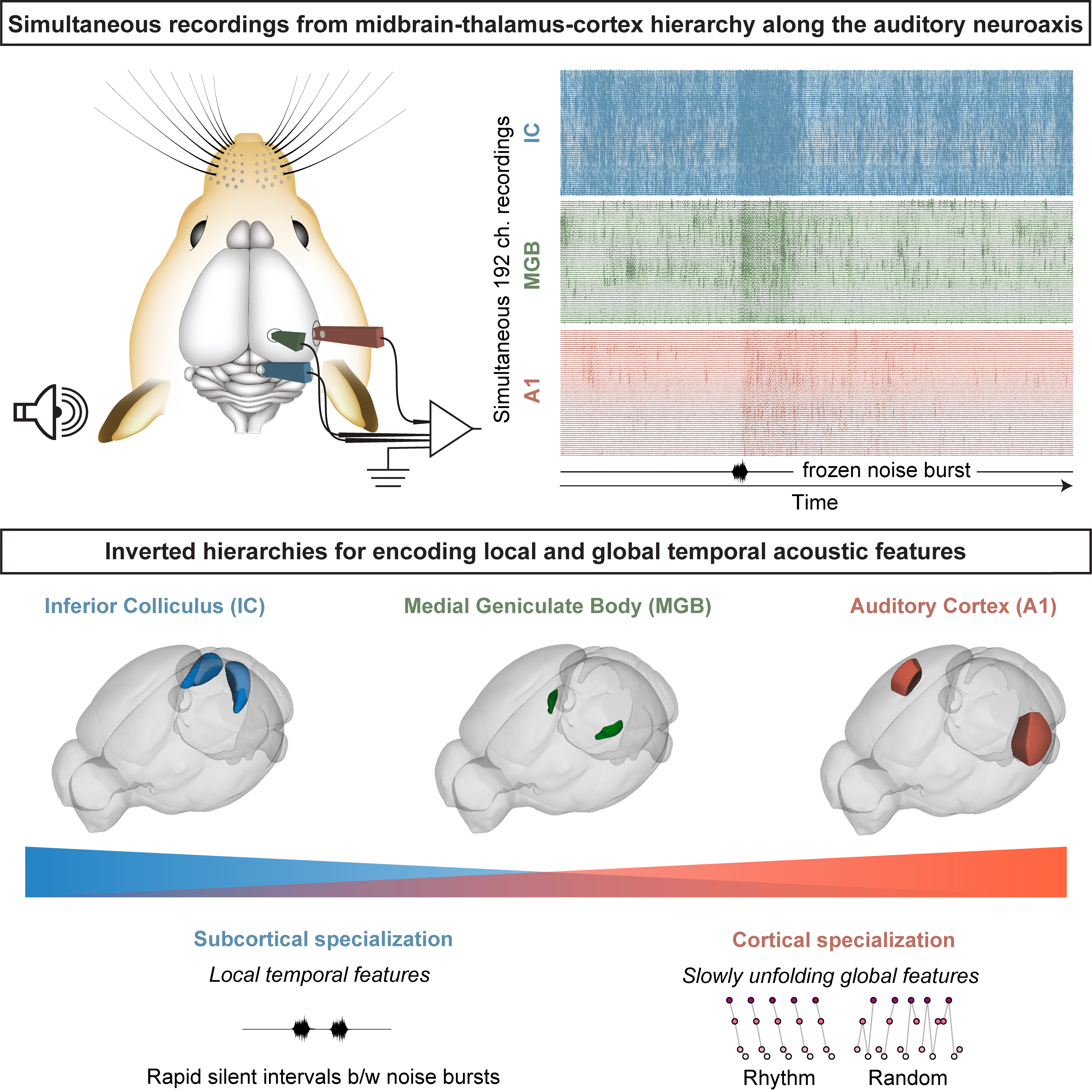

Using simultaneous unit recordings from the mouse auditory midbrain, thalamus, and cortex, we examined how neural coding is transformed along the auditory neuroaxis and found inverted hierarchies in the coding of sensory features at different temporal scales. We observed that the decay constants for sound-evoked spiking increased from the midbrain to cortex, and that the cortical timescale was itself shaped by slower changes in stimulus context, such that rhythmic, predictable sequences featured more orderly first spike latencies and more strongly dampened spiking decay. These findings show that low-level auditory neurons with fast timescales encode isolated sound features but not the longer gestalt, while the extended timescales in higher-level areas can facilitate sensitivity to slower contextual changes in the sensory environment. I presented this work at several conferences, including NCCD 2019, Cosyne 2020, and APAN 2020, where I received an award for one of the best oral presentations.

Sensory overamplification in layer 5 auditory corticofugal projection neurons following cochlear nerve synaptic damage

Asokan, M.M., Williamson, R.S., Hancock, K.E. and Polley, D.B., Nature Communications 2018

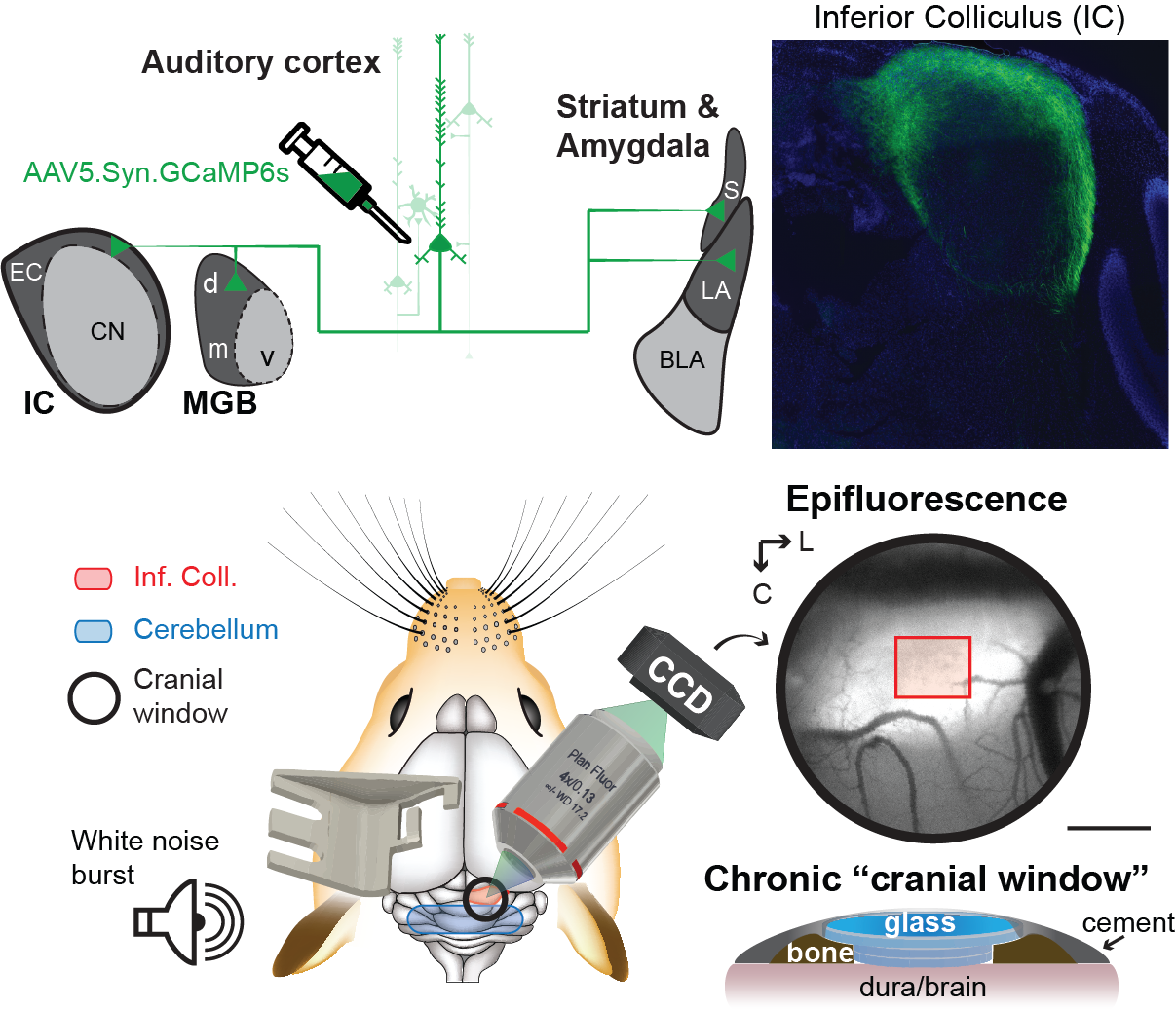

Layer 5 (L5) cortical projection neurons innervate far-ranging brain areas including inferior colliculus (IC), thalamus, lateral amygdala and striatum to coordinate integrative sensory processing and adaptive behaviors. We track daily changes in sound processing using chronic widefield calcium imaging of L5 axon terminals on the dorsal cap of the IC in awake, adult mice. After noise-induced damage of cochlear afferent synapses, the corticocollicular response gain rebounded above baseline levels by the following day and remained elevated for several weeks despite a persistent reduction in auditory nerve input. Sustained potentiation of these projection neurons that innervate multiple limbic and subcortical auditory centers may underlie the increased anxiety, stress and other forms of mood dysregulation experienced by subjects who acquire perceptual disorders such as tinnitus and hyperacusis after hearing loss. I presented this work as a poster at SfN 2017, and also received travel awards to present the work as podium talks at ICAC 2017 and ARO 2018.

Behavioral approaches to reveal top-down influences on active listening

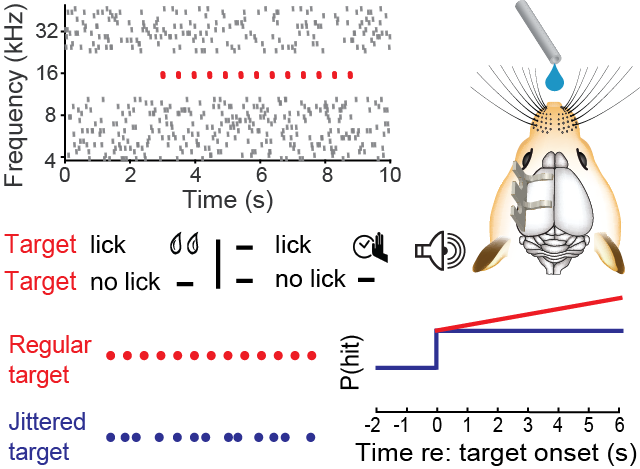

Clayton, K.K, Asokan, M.M., Watanabe, Y., Hancock, K.E. and Polley, D.B., Frontiers in Neuroscience 2021

Knowing when to listen can enhance the detection of faint sounds or the discrimination of target sounds from distractors. We found that periodicity aided the detectability of a liminal repeating target in a complex background noise by increasing the probability of late detection events, after the regularity of the target sound had been established. The prolonged time course of repetition-based stream segregation suggests a mechanism by which repetitive inputs are integrated over time and used to predict the incoming acoustic signal. Our work provides a behavioral proof of principle for future studies to uncover the neural substrates of this prolonged temporal integration process in a genetically tractable model organism.